🎆 G.A.I.A. 🎆

General Artificial Intelligence Architecture.

🦋

Exploration in Neural Network Design and Mapping

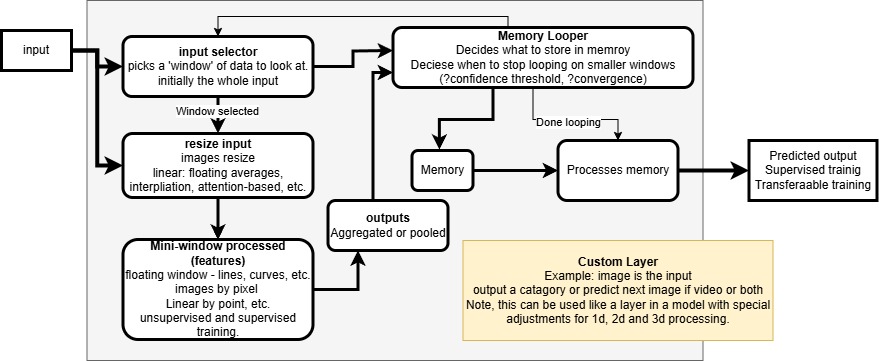

GAIA is a custom neural network architecture designed to emulate human-like focus, attention, and memory. It analyzes complex inputs such as images iteratively: in each cycle it identifies regions of interest, resizes them dynamically, and extracts both supervised and unsupervised features. It then integrates the results across cycles to produce context-aware predictions.
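
The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not GAIA's actual implementation: the brightest-window heuristic for choosing a region, the nearest-neighbor resize, the mean-intensity "feature", the coarse-to-fine window schedule, and every function name are hypothetical stand-ins for the learned components. Plain Python lists are used so the sketch has no dependencies.

```python
def crop(image, top, left, size):
    """Return a size x size window of a 2-D list-of-lists image."""
    return [row[left:left + size] for row in image[top:top + size]]


def resize_nearest(patch, out_size):
    """Resize a square patch to out_size x out_size by nearest-neighbor sampling."""
    n = len(patch)
    return [[patch[r * n // out_size][c * n // out_size]
             for c in range(out_size)]
            for r in range(out_size)]


def mean(patch):
    """Mean intensity of a patch; a stand-in for a learned feature extractor."""
    return sum(sum(row) for row in patch) / (len(patch) * len(patch[0]))


def most_salient_window(image, size):
    """Hypothetical attention step: pick the window with the highest mean."""
    h, w = len(image), len(image[0])
    candidates = [(r, c) for r in range(h - size + 1) for c in range(w - size + 1)]
    return max(candidates, key=lambda rc: mean(crop(image, rc[0], rc[1], size)))


def focus_cycles(image, window_sizes, glimpse_size=2):
    """Run one glimpse per window size and accumulate the results in memory."""
    memory = []
    for size in window_sizes:
        top, left = most_salient_window(image, size)
        # Every window is resized to the same glimpse size before extraction.
        glimpse = resize_nearest(crop(image, top, left, size), glimpse_size)
        memory.append({"top": top, "left": left, "size": size,
                       "feature": mean(glimpse)})
    # The "prediction" here is simply the average of the stored features.
    summary = sum(m["feature"] for m in memory) / len(memory)
    return memory, summary


image = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
memory, summary = focus_cycles(image, window_sizes=[4, 2])
```

With the two window sizes above, the second cycle narrows onto the bright 2x2 block at row 2, column 2. Each cycle's result stays in `memory`, so the final summary reflects both the coarse and the fine view.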

🧠 Overview & ⚙️ Technical Highlights

As the next generation of artificial intelligence systems emerges, the architecture behind them becomes critical. GAIA explores large-scale, modular AI structures with components like:

- Vectorized memory systems

- Dynamic window attention mechanisms

- Floating-window CNNs

- Dual-mode learning (unsupervised & supervised)

- Memory-guided decision loops
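
To make the memory-related items more concrete, here is one possible reading of a vectorized memory: a store of key vectors that is queried by similarity. The source does not specify how GAIA's memory works, so the `VectorMemory` class, its `write` and `read` methods, and the choice of cosine similarity are all assumptions made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Hypothetical key-value store queried by cosine similarity."""

    def __init__(self):
        self.keys = []
        self.values = []

    def write(self, key, value):
        """Store a value under a key vector."""
        self.keys.append(list(key))
        self.values.append(value)

    def read(self, query):
        """Return (value, similarity) for the best-matching stored key."""
        if not self.keys:
            return None, 0.0
        scores = [cosine(query, k) for k in self.keys]
        best = max(range(len(scores)), key=scores.__getitem__)
        return self.values[best], scores[best]


memory = VectorMemory()
memory.write([1.0, 0.0, 0.0], "edge")
memory.write([0.0, 1.0, 0.0], "corner")
label, score = memory.read([0.9, 0.1, 0.0])
```

A memory-guided decision loop could use the returned similarity as a confidence signal, for example looking again at the input when the best match is weak. That policy is likewise an assumption, not something the source describes.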

This architecture builds on concepts like deep feature abstraction and multi-pass attention, combining them into a flexible, trainable neural core. Rather than relying solely on pre-defined layers, GAIA introduces custom Keras layers with novel behaviors such as bell-curve activations, external training tracks, and adaptive memory storage.
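
As an illustration of a bell-curve activation, the sketch below uses a Gaussian, which responds most strongly when the input is near a chosen center and fades toward zero on both sides. The exact function GAIA uses is not given in the source; the Gaussian form and the `center` and `width` parameters are assumptions. In a custom Keras layer, the same expression would typically be written with tensor operations inside the layer's `call` method.

```python
import math


def bell(x, center=0.0, width=1.0):
    """Gaussian bell activation: 1 at x == center, falling toward 0 on both sides."""
    z = (x - center) / width
    return math.exp(-0.5 * z * z)


def bell_grad(x, center=0.0, width=1.0):
    """Derivative of bell() with respect to x, as needed for backpropagation."""
    z = (x - center) / width
    return -z / width * bell(x, center, width)


for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"x={x:+.1f}  bell={bell(x):.4f}  grad={bell_grad(x):+.4f}")
```

Unlike ReLU or sigmoid, this activation is not monotonic: the gradient is positive below the center, zero at the center, and negative above it, so a unit acts as a detector tuned to a particular input range.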

✅ Below is the first published schematic of the GAIA model (main layer):

👨‍💻 Creator’s Note

I’ve been exploring neural networks since 1993, when limited computing power constrained their practical use. Today, tools like TensorFlow, Keras, PyTorch, and scikit-learn make it practical to explore everything from dense networks to CNNs and transformers.

Modern architectures such as large language models (LLMs) encode sequences as vectors, a form of vector-space memory that enables complex predictions. Parallel networks, inspired by biological attention systems, now handle where to look and what to find. With GAIA, I aim to integrate these mechanisms into a cohesive, next-gen general-purpose neural architecture.

🧪 Development Timeline

✅ Phase 1: R&D and Concept Design

- Brainstorming and architecture drafting

- Design of modular flow and component layering

✅ Phase 2: Custom Keras Layers

- Build first custom layer

- Implement external training track

- Create bell-shaped activation function

- Combine bell activation with tracking

- Initial testing and verification

✅ Phase 3: Documentation & Community

- Blog post: Lessons learned during development

- 📷 Video demos and screenshots

- Source code, examples, and modular reuse ideas